Review: thread, thread block and grid

Defining the shape of a grid or a (thread) block

- CUDA provides the dim3 data type to allow the programmer to define the shape of the execution configuration

Syntax:

dim3 myShape = dim3( xDim, yDim, zDim );
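As a minimal sketch (the kernel name `showShape` is hypothetical, not one of the course demos), the program below builds the grid shape and the block shape with `dim3` and passes both to the `<<< >>>` launch. Any dimension left out of the `dim3(...)` call defaults to 1, so `dim3( 3 )` describes a grid of 3 x 1 x 1 blocks.

```cuda
#include <stdio.h>
#include <cuda_runtime.h>

// Hypothetical kernel: one thread reports the shape it was launched with
__global__ void showShape()
{
    if (blockIdx.x == 0 && threadIdx.x == 0)
        printf("grid = (%u,%u,%u), block = (%u,%u,%u)\n",
               gridDim.x, gridDim.y, gridDim.z,
               blockDim.x, blockDim.y, blockDim.z);
}

int main()
{
    dim3 gridShape  = dim3( 3 );         // yDim and zDim default to 1
    dim3 blockShape = dim3( 4, 1, 1 );   // all three dimensions given explicitly
    showShape<<< gridShape, blockShape >>>( );
    cudaDeviceSynchronize();             // wait so the device-side printf output appears
    return 0;
}
```

With these two shapes the launch is equivalent to `<<< 3, 4 >>>`, and the program should print `grid = (3,1,1), block = (4,1,1)`.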


- I will discuss the general usage of dim3 in the next set of slides...

- In this set of slides, I will discuss a simplified shape:

  - The 1-dimensional execution configuration


The 1-dimensional execution configuration

- A 1-dimensional execution configuration is defined using the following syntax:

<<< NBlocks, NThreads >>>

NBlocks = # blocks in the grid

NThreads = # threads in each (thread) block


Examples:

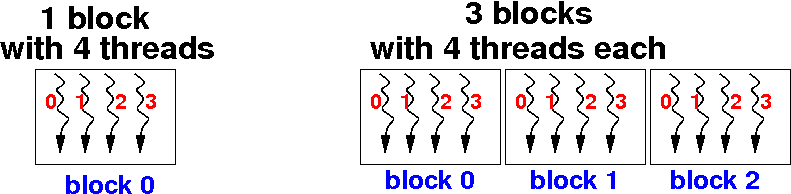

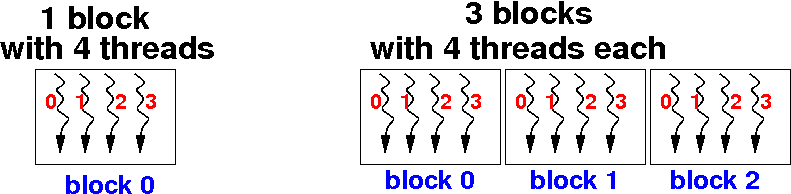

<<< 1, 4 >>>: use 1 block, with 4 threads in each block

<<< 3, 4 >>>: use 3 blocks, with 4 threads in each block
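To check that `<<< NBlocks, NThreads >>>` really starts NBlocks × NThreads threads, a small sketch can have every thread add 1 to a counter in GPU memory with `atomicAdd`. The names `countThreads` and `launchAndCount` are hypothetical helpers made up for this illustration, and error checking is omitted for brevity.

```cuda
#include <stdio.h>
#include <cuda_runtime.h>

// Hypothetical kernel: each launched thread adds 1 to a counter in GPU memory
__global__ void countThreads(int *counter)
{
    atomicAdd(counter, 1);   // atomic, so concurrent threads do not lose updates
}

// Hypothetical helper: launch <<< nBlocks, nThreads >>> and return how many threads ran
// (no error checking, to keep the sketch short)
int launchAndCount(int nBlocks, int nThreads)
{
    int hCounter = 0;
    int *dCounter;
    cudaMalloc(&dCounter, sizeof(int));
    cudaMemcpy(dCounter, &hCounter, sizeof(int), cudaMemcpyHostToDevice);
    countThreads<<< nBlocks, nThreads >>>(dCounter);
    // This copy waits for the kernel to finish before reading the counter
    cudaMemcpy(&hCounter, dCounter, sizeof(int), cudaMemcpyDeviceToHost);
    cudaFree(dCounter);
    return hCounter;
}

int main()
{
    printf("<<< 1, 4 >>> ran %d threads\n", launchAndCount(1, 4));
    printf("<<< 3, 4 >>> ran %d threads\n", launchAndCount(3, 4));
    return 0;
}
```

On a working GPU it should report 4 threads for `<<< 1, 4 >>>` and 12 threads for `<<< 3, 4 >>>`.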


How each thread in the grid can create a unique identifier for itself

- CUDA provides the following built-in variables to allow each thread to identify its position within the grid:

gridDim = # blocks in the grid

blockIdx = index of a block within the grid

blockDim = # threads in the block

threadIdx = index of a thread within its block


- Each of these 4 variables has 3 components:

.x = the value in the first dimension

.y = the value in the second dimension

.z = the value in the third dimension
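A minimal sketch of the three components in practice: thread #0 of block #0 copies the `.x`, `.y` and `.z` values of all four built-in variables into GPU memory, and the host prints them. The names `recordBuiltins` and `readBuiltins` are hypothetical. With a 1-dimensional launch such as `<<< 2, 4 >>>`, the unused `.y` and `.z` components of `gridDim` and `blockDim` are 1, and those of `blockIdx` and `threadIdx` are 0.

```cuda
#include <stdio.h>
#include <cuda_runtime.h>

// Hypothetical kernel: thread #0 of block #0 records all 12 components
__global__ void recordBuiltins(unsigned int *out)
{
    if (blockIdx.x == 0 && threadIdx.x == 0) {
        out[0] = gridDim.x;   out[1]  = gridDim.y;   out[2]  = gridDim.z;
        out[3] = blockIdx.x;  out[4]  = blockIdx.y;  out[5]  = blockIdx.z;
        out[6] = blockDim.x;  out[7]  = blockDim.y;  out[8]  = blockDim.z;
        out[9] = threadIdx.x; out[10] = threadIdx.y; out[11] = threadIdx.z;
    }
}

// Hypothetical helper: launch a 1-dimensional configuration and copy the 12 values back
void readBuiltins(unsigned int values[12], int nBlocks, int nThreads)
{
    unsigned int *dValues;
    cudaMalloc(&dValues, 12 * sizeof(unsigned int));
    recordBuiltins<<< nBlocks, nThreads >>>(dValues);
    cudaMemcpy(values, dValues, 12 * sizeof(unsigned int), cudaMemcpyDeviceToHost);
    cudaFree(dValues);
}

int main()
{
    unsigned int v[12];
    readBuiltins(v, 2, 4);
    printf("gridDim   = (%u,%u,%u)\n", v[0], v[1], v[2]);
    printf("blockIdx  = (%u,%u,%u)\n", v[3], v[4], v[5]);
    printf("blockDim  = (%u,%u,%u)\n", v[6], v[7], v[8]);
    printf("threadIdx = (%u,%u,%u)\n", v[9], v[10], v[11]);
    return 0;
}
```

For `<<< 2, 4 >>>` this should print `gridDim = (2,1,1)`, `blockIdx = (0,0,0)`, `blockDim = (4,1,1)` and `threadIdx = (0,0,0)`.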


I will illustrate their use with the first dimension (.x) in the next slides

How each thread in the grid can create a unique identifier for itself - DEMO

The following hello program makes each thread print its own set of identifying variables:

```cuda
#include <stdio.h>

// Kernel: every thread prints its own identifying variables
__global__ void hello( )
{
    printf("gridDim.x=%u, blockIdx.x=#%u, "
           "blockDim.x=%u, threadIdx.x=#%u\n",
           gridDim.x, blockIdx.x, blockDim.x, threadIdx.x);
}

int main()
{
    hello<<< 2, 4 >>>( );                  // 2 blocks, 4 threads in each block
    printf("I am the CPU: Hello World ! \n");
    cudaDeviceSynchronize();               // wait for the GPU to finish printing
    return 0;
}
```


DEMO:

/home/cs355001/demo/CUDA/1-intro/hello-thrIndex.cu
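The kernel launch in the demo is asynchronous: `main` continues right away, so the CPU's line typically appears before the GPU's lines, and `cudaDeviceSynchronize()` is what makes `main` wait for the kernel. The launch itself returns no error code. A minimal sketch of how errors could be checked, assuming a hypothetical helper `launchHello`, uses `cudaGetLastError()` for a bad launch configuration and the return value of `cudaDeviceSynchronize()` for errors raised while the kernel runs.

```cuda
#include <stdio.h>
#include <cuda_runtime.h>

__global__ void hello( )
{
    printf("gridDim.x=%u, blockIdx.x=#%u, blockDim.x=%u, threadIdx.x=#%u\n",
           gridDim.x, blockIdx.x, blockDim.x, threadIdx.x);
}

// Hypothetical helper: launch hello<<< nBlocks, nThreads >>>, return 0 on success
int launchHello(int nBlocks, int nThreads)
{
    hello<<< nBlocks, nThreads >>>( );
    cudaError_t err = cudaGetLastError();   // reports an invalid launch configuration
    if (err == cudaSuccess)
        err = cudaDeviceSynchronize();      // waits for the kernel, reports run-time errors
    if (err != cudaSuccess) {
        printf("hello<<< %d, %d >>> failed: %s\n",
               nBlocks, nThreads, cudaGetErrorString(err));
        return 1;
    }
    return 0;
}

int main()
{
    launchHello(2, 4);      // valid: 8 threads print their variables
    launchHello(1, 2048);   // invalid: a block may hold at most 1024 threads
    return 0;
}
```

The second launch should fail because current GPUs allow at most 1024 threads per block.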

How each thread in the grid can create a unique identifier for itself

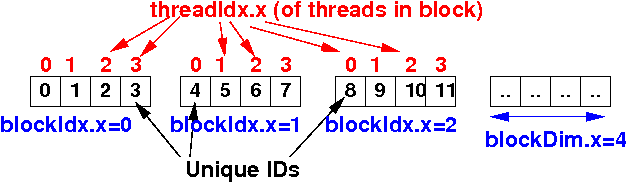

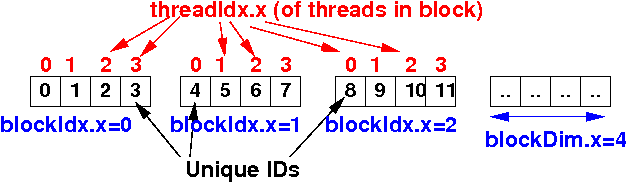

- We can use the built-in variables to compute a unique identifier for each thread as follows:

uniqueID = blockIdx.x × blockDim.x + threadIdx.x

gridDim.x = # blocks in the grid (in the first dimension)

blockIdx.x = block index of the (current) thread in the grid

blockDim.x = # threads in (each of) the (thread) block (in the first dimension)

threadIdx.x = thread index of the (current) thread in its block
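A worked example of the formula for `<<< 2, 4 >>>`: the threads of block #1 start at 1 × 4 = 4, so block #0 produces IDs 0 to 3 and block #1 produces IDs 4 to 7, with no overlap. The sketch below, which needs no GPU, walks through every (block, thread) pair on the CPU; the function name `uniqueId` is hypothetical, and marking it `__host__ __device__` lets both the CPU and a kernel call it.

```cuda
#include <stdio.h>

// Hypothetical helper: uniqueID = blockIdx.x * blockDim.x + threadIdx.x
__host__ __device__ unsigned int uniqueId(unsigned int blockIdxX,
                                          unsigned int blockDimX,
                                          unsigned int threadIdxX)
{
    return blockIdxX * blockDimX + threadIdxX;
}

int main()
{
    const unsigned int nBlocks = 2, nThreads = 4;   // mimics <<< 2, 4 >>>
    // Enumerate every (block, thread) pair on the CPU
    for (unsigned int b = 0; b < nBlocks; b++)
        for (unsigned int t = 0; t < nThreads; t++)
            printf("blockIdx.x=%u, threadIdx.x=%u -> ID=%u\n",
                   b, t, uniqueId(b, nThreads, t));
    return 0;
}
```

For instance, thread #2 of block #1 gets ID 1 × 4 + 2 = 6.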



How each thread in the grid can create a unique identifier for itself - DEMO

The following hello program makes each thread print its unique ID:

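```cuda
#include <stdio.h>

// Kernel: every thread prints its identifying variables and its unique ID
__global__ void hello( )
{
    printf("gridDim.x=%u, blockIdx.x=#%u, "
           "blockDim.x=%u, threadIdx.x=#%u -> ID=%u of %u\n",
           gridDim.x, blockIdx.x, blockDim.x, threadIdx.x,
           blockIdx.x*blockDim.x+threadIdx.x,   // unique ID of this thread
           gridDim.x*blockDim.x);               // total # threads in the grid
}

int main()
{
    hello<<< 2, 4 >>>( );                       // 2 blocks x 4 threads: IDs 0..7
    printf("I am the CPU: Hello World ! \n");
    cudaDeviceSynchronize();                    // wait for the GPU to finish printing
    return 0;
}
```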


DEMO:

/home/cs355001/demo/CUDA/1-intro/hello-thrIndex2.cu
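The printed IDs suggest that every thread gets a different value between 0 and gridDim.x × blockDim.x - 1. A minimal sketch that checks this claim: each thread uses its unique ID as an array index and atomically increments that slot, and the host then confirms every slot was hit exactly once. The names `markSlots` and `idsAreUnique` are hypothetical, and error checking is omitted for brevity.

```cuda
#include <stdio.h>
#include <stdlib.h>
#include <cuda_runtime.h>

// Hypothetical kernel: each thread increments the array slot at its unique ID
__global__ void markSlots(int *hits)
{
    int id = blockIdx.x * blockDim.x + threadIdx.x;
    atomicAdd(&hits[id], 1);   // atomic, so a duplicate ID would still be counted correctly
}

// Hypothetical helper: returns 1 if each ID in 0 .. nBlocks*nThreads-1 appeared exactly once
int idsAreUnique(int nBlocks, int nThreads)
{
    int n = nBlocks * nThreads;               // same value as gridDim.x * blockDim.x
    int *hHits = (int *)malloc(n * sizeof(int));
    int *dHits;
    cudaMalloc(&dHits, n * sizeof(int));
    cudaMemset(dHits, 0, n * sizeof(int));    // every slot starts at 0 hits
    markSlots<<< nBlocks, nThreads >>>(dHits);
    cudaMemcpy(hHits, dHits, n * sizeof(int), cudaMemcpyDeviceToHost);
    int ok = 1;
    for (int i = 0; i < n; i++)
        if (hHits[i] != 1) ok = 0;
    cudaFree(dHits);
    free(hHits);
    return ok;
}

int main()
{
    printf("<<< 2, 4 >>>: IDs unique? %s\n", idsAreUnique(2, 4) ? "yes" : "no");
    printf("<<< 3, 4 >>>: IDs unique? %s\n", idsAreUnique(3, 4) ? "yes" : "no");
    return 0;
}
```

Using the unique ID as an array index in this way is what lets each thread work on its own element of a data set.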
