This set of slides goes into more detail on the GPU architecture made by NVIDIA:

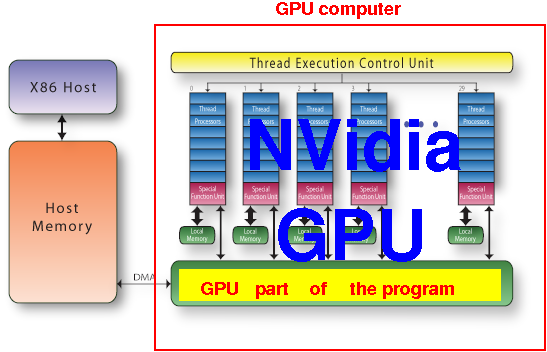

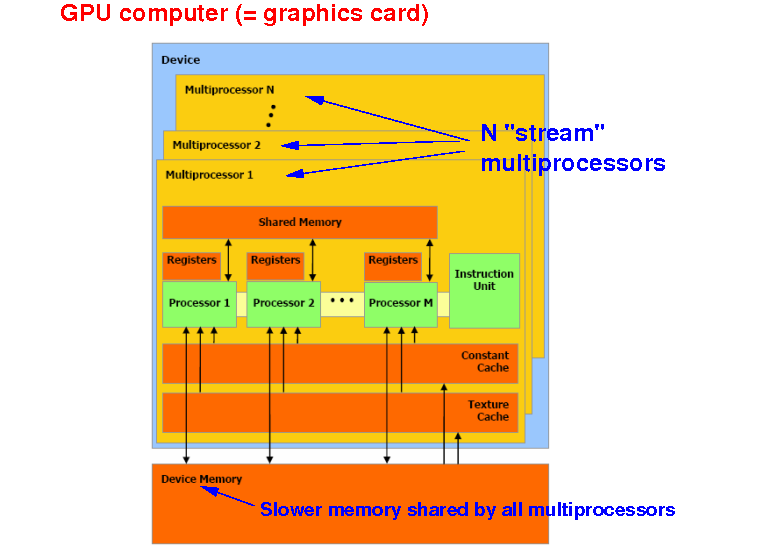

An NVIDIA GPU consists of N "streaming" multiprocessors that can access a shared device memory:

The device memory can be accessed by all multiprocessors (i.e., it is shared among them).

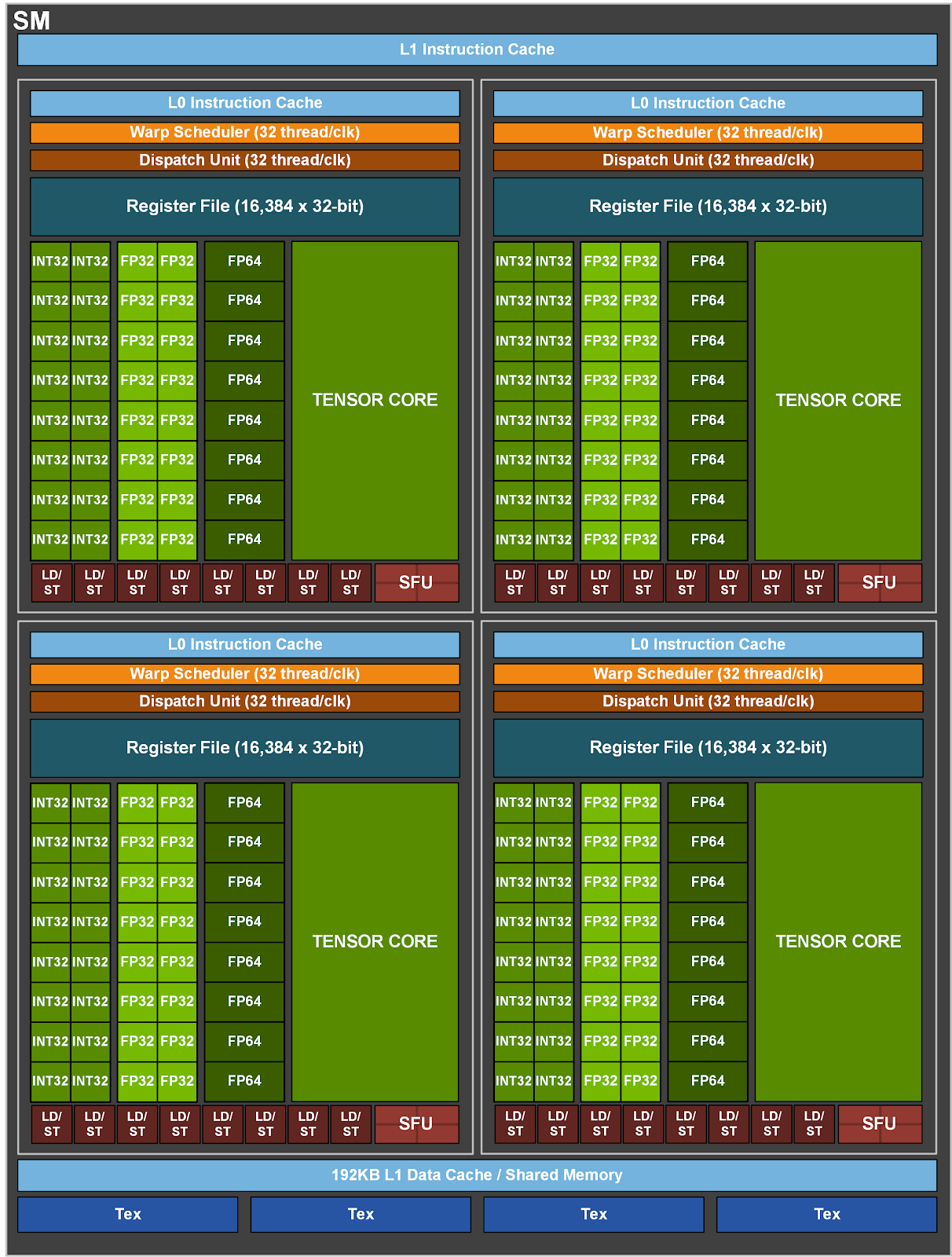

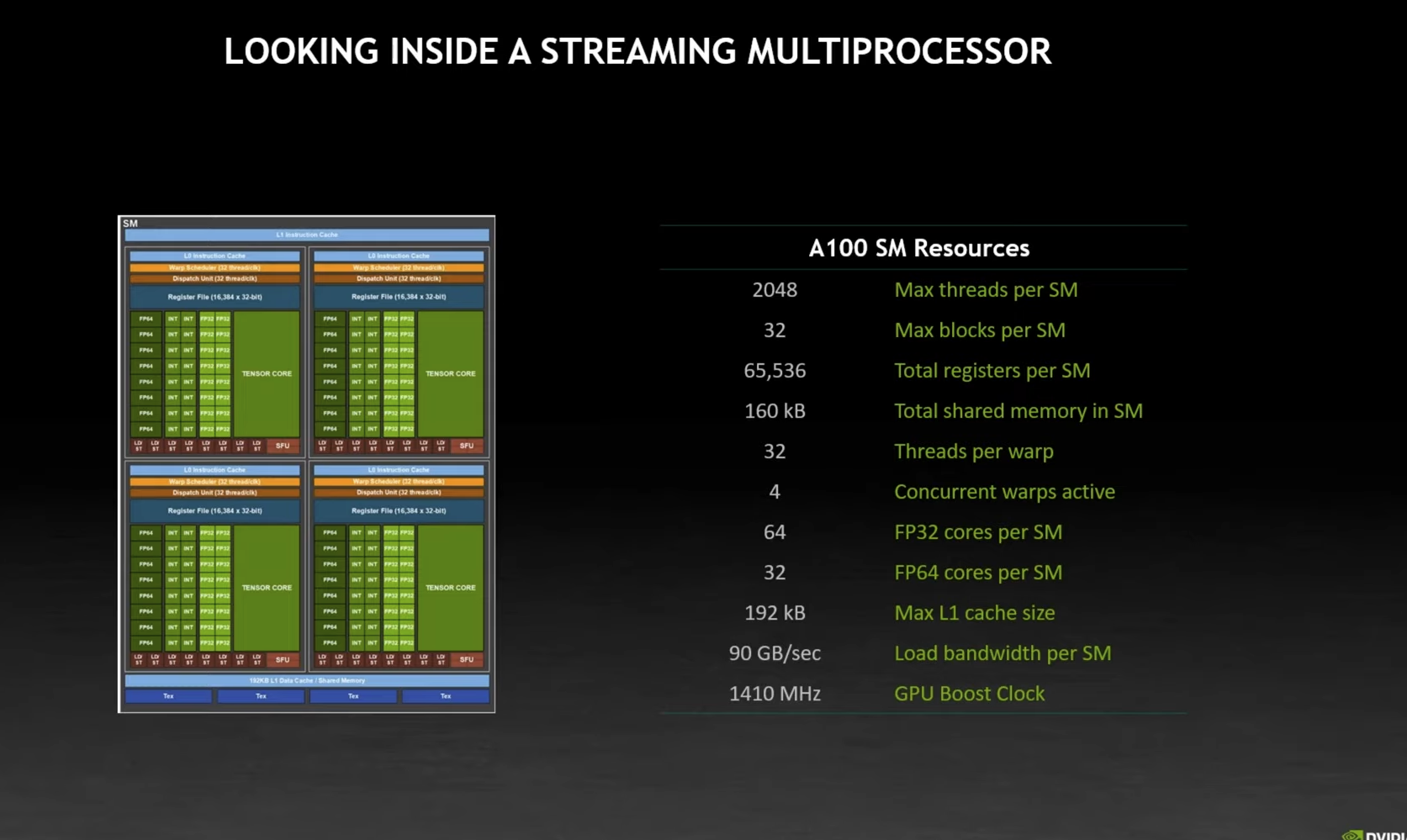

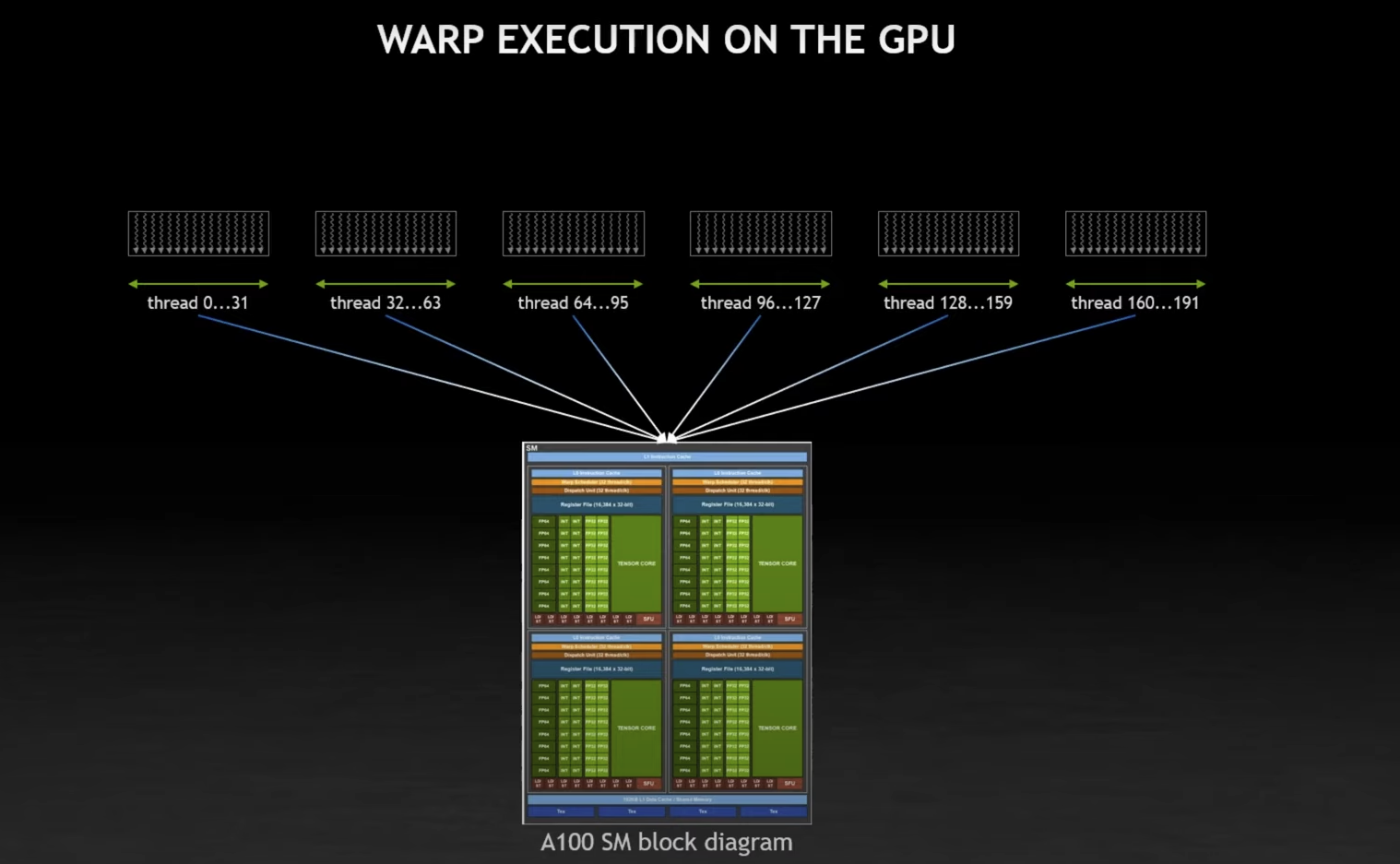

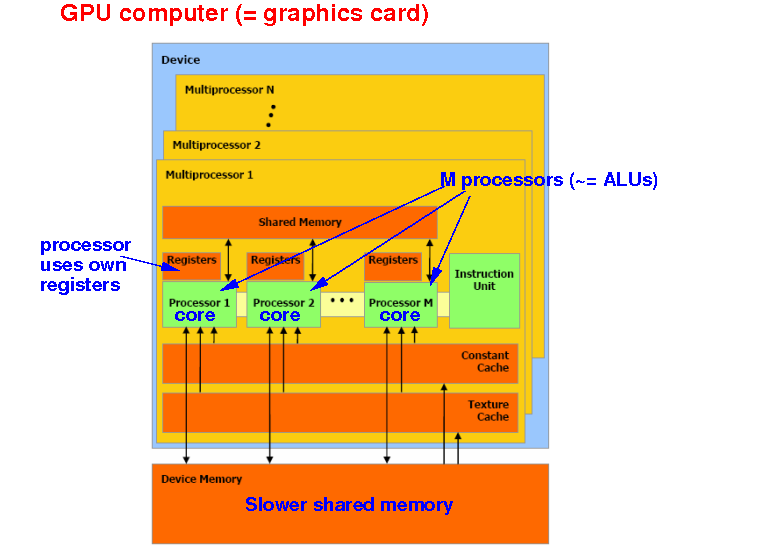
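A minimal CUDA sketch of the point above. It assumes a CUDA-capable GPU and the `nvcc` toolchain. It uses the real runtime call `cudaGetDeviceProperties` to report the number of multiprocessors (`multiProcessorCount`, the "N" above) and the size of device memory (`totalGlobalMem`). It then launches a kernel whose blocks, spread over the multiprocessors, all write into one `cudaMalloc`'d buffer. The names `writeBlockIds` and `countBlocksWritten` are hypothetical and chosen only for illustration.

```cuda
#include <cassert>
#include <cstdio>
#include <vector>
#include <cuda_runtime.h>

// Hypothetical kernel: thread 0 of each block writes the block's index into one
// device-memory array. Blocks may run on different multiprocessors, yet all of
// them see the same buffer.
__global__ void writeBlockIds(int* out) {
    if (threadIdx.x == 0) {
        out[blockIdx.x] = blockIdx.x;
    }
}

// Hypothetical helper: returns how many blocks wrote their entry correctly,
// or -1 if a CUDA call failed.
int countBlocksWritten(int numBlocks) {
    int* d_out = nullptr;
    if (cudaMalloc(&d_out, numBlocks * sizeof(int)) != cudaSuccess) return -1;
    writeBlockIds<<<numBlocks, 32>>>(d_out);
    std::vector<int> h_out(numBlocks, -1);
    // cudaMemcpy waits for the kernel on the default stream to finish first.
    cudaError_t err = cudaMemcpy(h_out.data(), d_out, numBlocks * sizeof(int),
                                 cudaMemcpyDeviceToHost);
    cudaFree(d_out);
    if (err != cudaSuccess) return -1;
    int ok = 0;
    for (int i = 0; i < numBlocks; ++i) ok += (h_out[i] == i);
    return ok;
}

int main() {
    cudaDeviceProp prop;
    if (cudaGetDeviceProperties(&prop, 0) != cudaSuccess) {
        std::printf("no CUDA device found\n");
        return 1;
    }
    // multiProcessorCount is "N"; totalGlobalMem is the size of the shared device memory.
    std::printf("%s: %d multiprocessors, %zu bytes of device memory\n",
                prop.name, prop.multiProcessorCount, prop.totalGlobalMem);
    // Launch several blocks per multiprocessor so the scheduler has work for all of them.
    int numBlocks = 4 * prop.multiProcessorCount;
    int written = countBlocksWritten(numBlocks);
    std::printf("%d of %d blocks wrote to device memory\n", written, numBlocks);
    assert(written == numBlocks);
    return 0;
}
```

Because every block writes to the same device-memory buffer, the host can read all results with a single `cudaMemcpy`. The hardware scheduler, not the program, decides which multiprocessor runs which block.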

Each multiprocessor has M processors (a.k.a. CUDA cores ~= ALUs):

Note: a processor or "CUDA core" = floating-point unit (comparable to an ALU)

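A CUDA core executes scalar arithmetic, so the code a single thread runs is ordinary floating-point math. Parallelism comes from running many threads at once across the cores of all multiprocessors. SAXPY (`y = a*x + y`) is a common illustration, given here only as a sketch. The names `saxpy` and `runSaxpy` and the launch configuration of 32 blocks of 256 threads are illustrative choices, and error checking is omitted for brevity.

```cuda
#include <cassert>
#include <cstdio>
#include <vector>
#include <cuda_runtime.h>

// y[i] = a * x[i] + y[i]: each thread does one floating-point multiply-add per
// element, the kind of scalar work a single CUDA core executes.
__global__ void saxpy(int n, float a, const float* x, float* y) {
    // Grid-stride loop: correct for any n, whatever the grid size.
    for (int i = blockIdx.x * blockDim.x + threadIdx.x; i < n;
         i += gridDim.x * blockDim.x) {
        y[i] = a * x[i] + y[i];
    }
}

// Illustrative host wrapper: copy inputs to device memory, run the kernel,
// copy the result back. (Error checking omitted for brevity.)
std::vector<float> runSaxpy(float a, const std::vector<float>& x, std::vector<float> y) {
    int n = static_cast<int>(x.size());
    size_t bytes = n * sizeof(float);
    float *d_x = nullptr, *d_y = nullptr;
    cudaMalloc(&d_x, bytes);
    cudaMalloc(&d_y, bytes);
    cudaMemcpy(d_x, x.data(), bytes, cudaMemcpyHostToDevice);
    cudaMemcpy(d_y, y.data(), bytes, cudaMemcpyHostToDevice);
    saxpy<<<32, 256>>>(n, a, d_x, d_y);
    cudaMemcpy(y.data(), d_y, bytes, cudaMemcpyDeviceToHost);
    cudaFree(d_x);
    cudaFree(d_y);
    return y;
}

int main() {
    std::vector<float> x(1000, 1.0f), y(1000, 2.0f);
    std::vector<float> r = runSaxpy(3.0f, x, y);  // 3 * 1 + 2 = 5 for every element
    assert(r[0] == 5.0f && r[999] == 5.0f);
    std::printf("y[0] = %f\n", r[0]);
    return 0;
}
```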

Each processor or CUDA core (= ALU) uses its own registers.


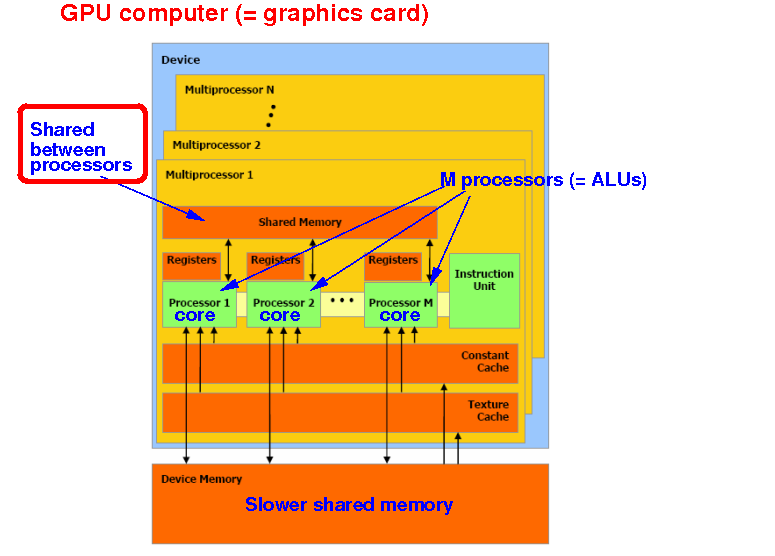
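Registers are private to a thread. In CUDA, the compiler normally places a kernel's scalar local variables in registers, though they can spill to slower local memory under register pressure. The sketch below uses the hypothetical names `dotPartial` and `dot`. Each thread keeps its running sum in such a local variable. No other thread can see that register, so each thread publishes its partial result to device memory with `atomicAdd`. Error checking is omitted.

```cuda
#include <cassert>
#include <cstdio>
#include <vector>
#include <cuda_runtime.h>

// Hypothetical kernel: each thread accumulates a private partial dot product.
__global__ void dotPartial(int n, const float* x, const float* y, float* result) {
    // Scalar local: normally held in this thread's registers, invisible to other threads.
    float sum = 0.0f;
    for (int i = blockIdx.x * blockDim.x + threadIdx.x; i < n;
         i += gridDim.x * blockDim.x) {
        sum += x[i] * y[i];
    }
    // Registers cannot be shared, so the result goes out through device memory.
    atomicAdd(result, sum);
}

// Illustrative host wrapper (error checking omitted for brevity).
float dot(const std::vector<float>& x, const std::vector<float>& y) {
    int n = static_cast<int>(x.size());
    size_t bytes = n * sizeof(float);
    float *d_x = nullptr, *d_y = nullptr, *d_result = nullptr;
    cudaMalloc(&d_x, bytes);
    cudaMalloc(&d_y, bytes);
    cudaMalloc(&d_result, sizeof(float));
    cudaMemcpy(d_x, x.data(), bytes, cudaMemcpyHostToDevice);
    cudaMemcpy(d_y, y.data(), bytes, cudaMemcpyHostToDevice);
    cudaMemset(d_result, 0, sizeof(float));  // all-zero bytes == 0.0f
    dotPartial<<<8, 128>>>(n, d_x, d_y, d_result);
    float result = 0.0f;
    cudaMemcpy(&result, d_result, sizeof(float), cudaMemcpyDeviceToHost);
    cudaFree(d_x);
    cudaFree(d_y);
    cudaFree(d_result);
    return result;
}

int main() {
    std::vector<float> x(1000, 1.0f), y(1000, 2.0f);
    float r = dot(x, y);  // 1000 * (1 * 2) = 2000, exact in float
    assert(r == 2000.0f);
    std::printf("dot = %f\n", r);
    return 0;
}
```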

All cores in the same multiprocessor can also access a shared memory, which is faster than the device memory:

This shared memory enables threads (= programs) running on the CUDA cores of the same multiprocessor to communicate with one another.

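A classic use of this shared memory is a block-level reduction. The sketch below uses the hypothetical names `blockSum` and `sumOnGpu` and assumes a block size of 256, a power of two. The threads of one block load values into a `__shared__` array and combine them pairwise in a tree. `__syncthreads()` is the barrier that makes each thread's writes visible to the rest of the block before they are read. In CUDA, shared memory is allocated per thread block, and all threads of a block run on the same multiprocessor. Error checking is omitted.

```cuda
#include <cassert>
#include <cstdio>
#include <vector>
#include <cuda_runtime.h>

constexpr int BLOCK_SIZE = 256;  // assumed power of two for the tree reduction

// Hypothetical kernel: the threads of one block sum their slice of the input
// by communicating through on-chip __shared__ memory.
__global__ void blockSum(const int* in, int n, int* blockTotals) {
    __shared__ int partial[BLOCK_SIZE];
    int tid = threadIdx.x;
    int i = blockIdx.x * blockDim.x + tid;
    partial[tid] = (i < n) ? in[i] : 0;
    __syncthreads();  // all values written before any thread reads a neighbour's
    for (int stride = blockDim.x / 2; stride > 0; stride /= 2) {
        if (tid < stride) partial[tid] += partial[tid + stride];
        __syncthreads();  // every thread reaches this barrier, not only tid < stride
    }
    if (tid == 0) blockTotals[blockIdx.x] = partial[0];
}

// Illustrative host wrapper (error checking omitted for brevity).
int sumOnGpu(const std::vector<int>& h_in) {
    int n = static_cast<int>(h_in.size());
    int numBlocks = (n + BLOCK_SIZE - 1) / BLOCK_SIZE;
    int *d_in = nullptr, *d_totals = nullptr;
    cudaMalloc(&d_in, n * sizeof(int));
    cudaMalloc(&d_totals, numBlocks * sizeof(int));
    cudaMemcpy(d_in, h_in.data(), n * sizeof(int), cudaMemcpyHostToDevice);
    blockSum<<<numBlocks, BLOCK_SIZE>>>(d_in, n, d_totals);
    std::vector<int> totals(numBlocks);
    cudaMemcpy(totals.data(), d_totals, numBlocks * sizeof(int), cudaMemcpyDeviceToHost);
    cudaFree(d_in);
    cudaFree(d_totals);
    int sum = 0;
    for (int t : totals) sum += t;  // blocks cannot see each other's shared memory
    return sum;
}

int main() {
    std::vector<int> v(1000);
    for (int i = 0; i < 1000; ++i) v[i] = i + 1;
    int s = sumOnGpu(v);  // 1 + 2 + ... + 1000 = 500500
    assert(s == 500500);
    std::printf("sum = %d\n", s);
    return 0;
}
```

Threads in different blocks cannot read each other's shared memory. The per-block totals are therefore combined afterwards, here on the host; a second kernel launch would also work.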