|

Recall the Hello World CUDA program:

#include <stdio.h> // C programming header file

#include <unistd.h> // C programming header file

/* ------------------------------------

Your first kernel (= GPU function)

------------------------------------ */

__global__ void hello( )

{

printf("Hello World !\n"); // You see this msg 4 times...

}

int main()

{

hello<<< 1, 4 >>>( ); // launch kernel

cudaDeviceSynchronize();

printf("I am the CPU: Hello World ! \n");

}

|

For our demo, I will rename the kernel function to: addOne( )

__global__ void addOne( ) { printf("Hello World !\n"); } int main() { addOne<<< 1, 4 >>>( ); // launch kernel cudaDeviceSynchronize(); printf("I am the CPU: Hello World ! \n"); } |

The addOne( ) function will add 1 to the same variable x...

We create a shared variable x between the CPU and GPU:

__managed__ int x; __global__ void addOne( ) { printf("Hello World !\n"); } int main() { addOne<<< 1, 4 >>>( ); // launch kernel cudaDeviceSynchronize(); printf("I am the CPU: Hello World ! \n"); } |

Initialize the shared variable x to 0 (zero):

__managed__ int x; __global__ void addOne( ) { printf("Hello World !\n"); } int main() { x = 0; addOne<<< 1, 4 >>>( ); // launch kernel cudaDeviceSynchronize(); printf("I am the CPU: Hello World ! \n"); } |

The GPU function Make addOne( ) will add 1 to its x (and print a message on what it did):

__managed__ int x; __global__ void addOne( ) { printf("Thread %d: Adding 1 to x\n", threadIdx.x); x = x + 1; } int main() { x = 0; addOne<<< 1, 4 >>>( ); // launch kernel cudaDeviceSynchronize(); printf("Main after addOne(): x = %d\n", x\n"); } |

DEMO: /home/cs355001/demo/CUDA/5-add-common-var/add-common1.cu

Output of the demo program:

cs355@ghost01 (841)> add-common1

Main before addOne(): x = 0

Thread 0: Adding 1 to x

Thread 1: Adding 1 to x

Thread 2: Adding 1 to x

Thread 3: Adding 1 to x

Main after addOne(): x = 1

|

In other words: the 4 threads has each performed x = x + 1 once

We expected that x is increased by 4 !!!

However, x is increased by 1 only !!!

Explanation of this inconsistent behavior:

|

Explanation of this inconsistent behavior --- recall that:

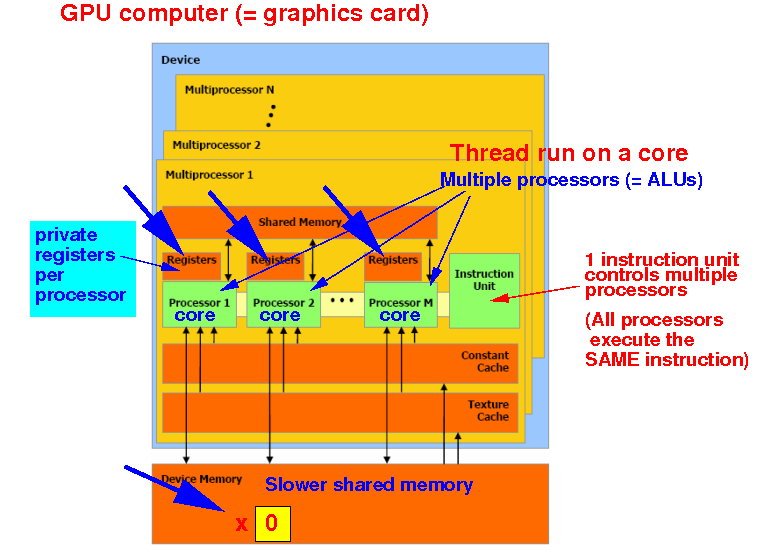

Threads executes on CUDA cores and each core has its own registers !!!

Explanation of this inconsistent behavior

Let's execute the GPU program using 2 (simultaneously running) threads:

2 threads executes: x = x + 1 Initially: x = 0 Thread 1 Thread 2 ============ =========== ldr R0,x ldr R0,x add R0,R0,#1 add R0,R0,#1 str R0,x str R0,x |

Explanation of this inconsistent behavior

Let's execute the GPU program using 2 (simultaneously running) threads:

2 threads executes: x = x + 1 Initially: x = 0 Thread 1 Thread 2 ============ =========== ldr R0,x (Thr 1's R0 = 0) ldr R0,x (Thr 2's R0 = 0) add R0,R0,#1 add R0,R0,#1 str R0,x str R0,x |

Notice that both thread will set: R0 = 0 !!!

Explanation of this inconsistent behavior

Let's execute the GPU program using 2 (simultaneously running) threads:

2 threads executes: x = x + 1 Initially: x = 0 Thread 1 Thread 2 ============ =========== ldr R0,x (Thr 1's R0 = 0) ldr R0,x (Thr 2's R0 = 0) add R0,R0,#1 (Thr 1's R0 = 1) add R0,R0,#1 (Thr 2's R0 = 1) str R0,x (Thr 1 set x= 1) str R0,x (Thr 2 set x= 1) |

Notice that both thread will increase R0 to 1 !!!

Explanation of this inconsistent behavior

Let's execute the GPU program using 2 (simultaneously running) threads:

2 threads executes: x = x + 1 Initially: x = 0 Thread 1 Thread 2 ============ =========== ldr R0,x (Thr 1's R0 = 0) ldr R0,x (Thr 2's R0 = 0) add R0,R0,#1 (Thr 1's R0 = 1) add R0,R0,#1 (Thr 2's R0 = 1) str R0,x (Thr 1 set x= 1) str R0,x (Thr 2 set x= 1) |

Notice that both thread will update x to 1 !!!

Therefore: unsynchronized simultaneous updates to the same variable results in "missing" updates

|

Consider the previous CUDA program where 4 threads simultaneously add 1 to the var x:

__managed__ int x; __global__ void addOne( ) { printf("Thread %d: Adding 1 to x\n", threadIdx.x); x = x + 1; } int main() { x = 0; addOne<<< 1, 4 >>>( ); // launch kernel cudaDeviceSynchronize(); printf("Main after addOne(): x = %d\n", x\n"); } |

We change it to use atomicAdd(x, 1) to add 1 atomically:

__managed__ int x; __global__ void addOne( ) { printf("Thread %d: Adding 1 to x\n", threadIdx.x); atomicAdd(&x, 1); } int main() { x = 0; addOne<<< 1, 4 >>>( ); // launch kernel cudaDeviceSynchronize(); printf("Main after addOne(): x = %d\n", x\n"); } |

DEMO: /home/cs355001/demo/CUDA/5-add-common-var/add-common2.cu

Output of the demo program:

cs355@ghost01 (841)> add-common2

Main before addOne(): x = 0

Thread 0: Adding 1 to x

Thread 1: Adding 1 to x

Thread 2: Adding 1 to x

Thread 3: Adding 1 to x

Main after addOne(): x = 4

|

In other words: the 4 threads has each performed x = x + 1 once

We expected that x is increased by 4 !!!

It is now correct !!!