Review: the matrix multiplication operation

+- -+ +- -+

|A11 A12 A13| |B11 B12 B13|

A = |A21 A22 A23| B = |B21 B22 B23|

|A31 A32 A33| |B31 B32 B33|

+- -+ +- -+

Then:

+- -+

|C11 C12 C13|

C = A*B = |C21 C22 C23|

|C31 C32 C33|

+- -+

where:

Cij = ∑k=0..N-1 AikBkj

(for i = 0, 1, .., N-1 and j = 0, 1, ..., N-1)

|

The matrix multiplication with two (2) N×N matrices (C = A × B):

int N = ...; // Some pre-defined value

float A[N][N]; // Matrix 1

float B[N][N]; // Matrix 2

float C[N][N]; // Output matrix

//CPU matrix mult alg for static 2-dimensional arrays

for (int i = 0; i < N; i++)

for (int j = 0; j < N; j++)

{

/* ---------------------------------

Compute the matrix element

Cij = ∑k=0..N-1 AikBkj

--------------------------------- */

C[i][j] = 0.0;

for (int k = 0; k < N; k++)

C[i][j] = C[i][j] + A[i][k]*B[k][j]; // "inner" vector product

}

|

Limitations on using 2-dimensional arrays in C:

|

Better solution:

|

Problem: we

cannot

use expression

A[i][j]

(2 dim index) to

access the

allocated elements

We must use

A[i]

(1 dim index) !!!

The memory space allocated by the malloc( ) function:

|

The memory space

allocated by

malloc( ) has

a one-dimensional structure !!

(The memory cells are

consecutive in

memory, so they are

line up on

a "straight line")

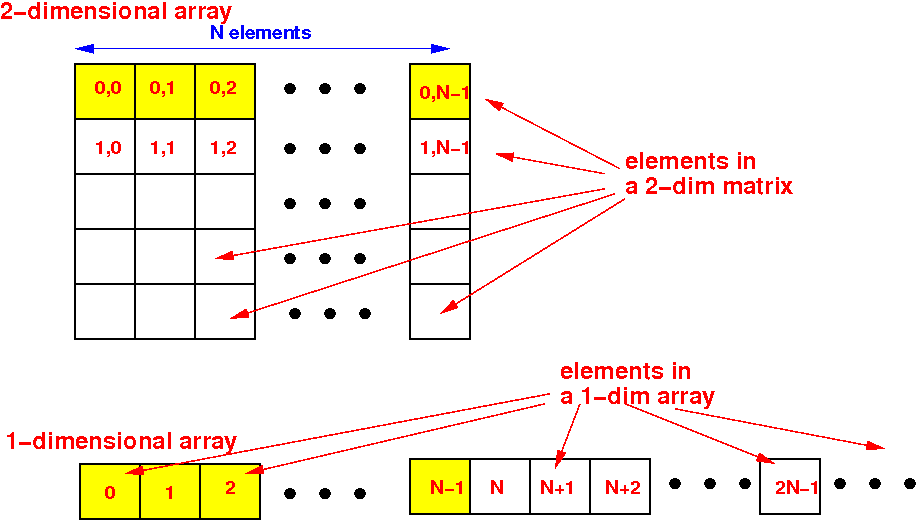

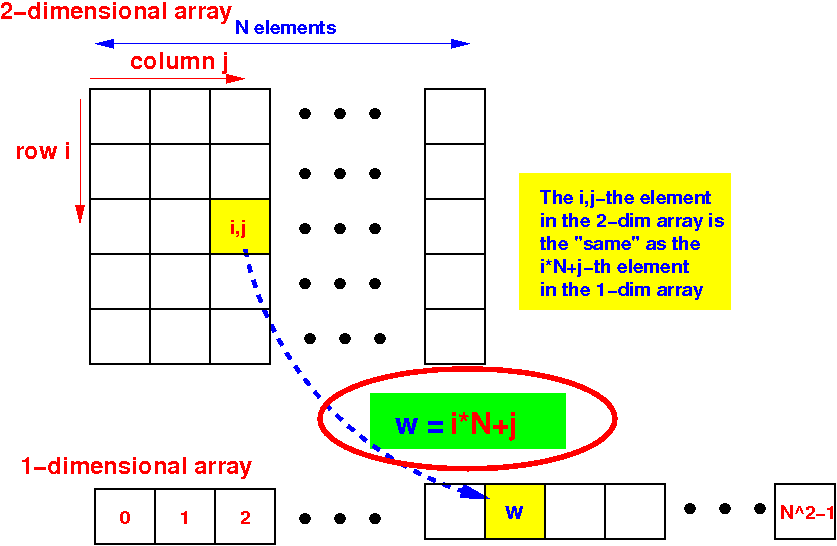

Mapping a 2-dimensional matrix to a 1-dimensional array (allocated by malloc( )):

|

We will discuss the mapping function next

Mapping a 2-dimensional matrix to a 1-dimensional array (allocated by malloc( )):

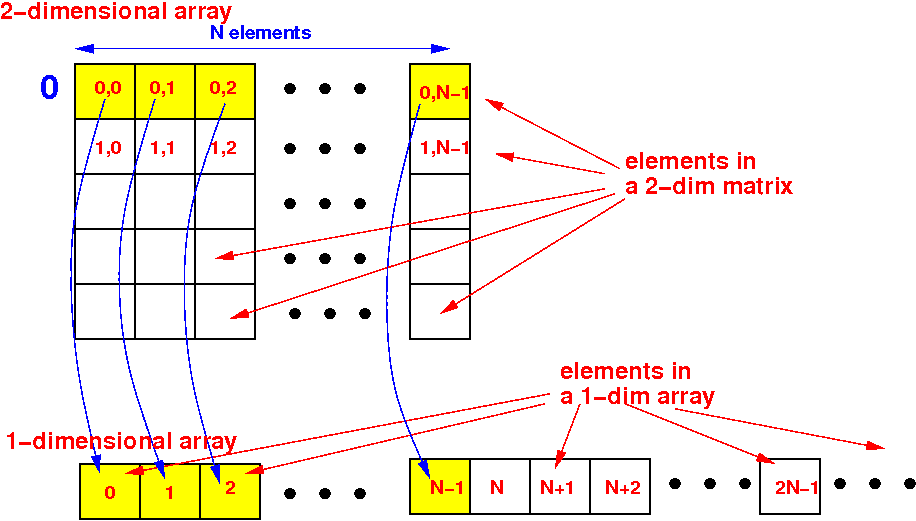

We will map the matrix elements row-wise onto the 1-dim array next

We map the 1st row of the matrix to the first N elements in 1-dim array:

Relationship: w (1-dim index) = 0×N + j

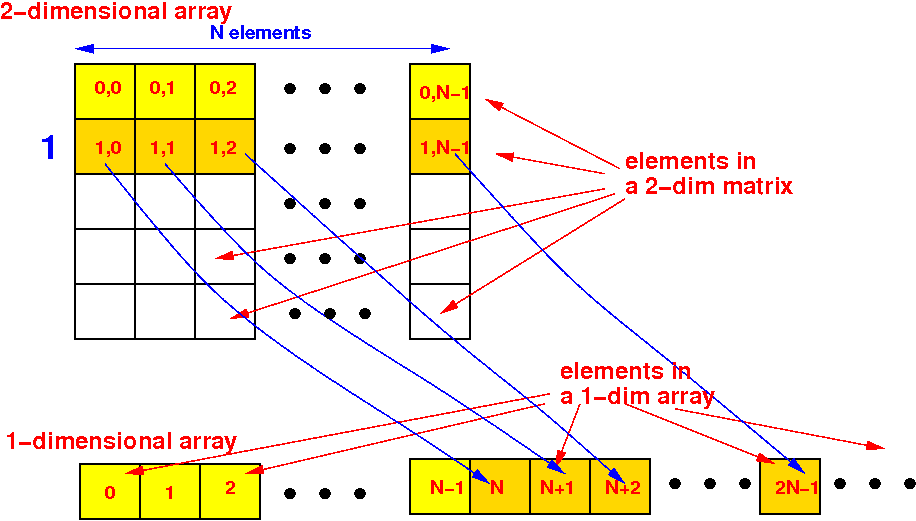

We map the 2nd row of the matrix to the second N elements in 1-dim array:

Relationship: w (1-dim index) = 1×N + j

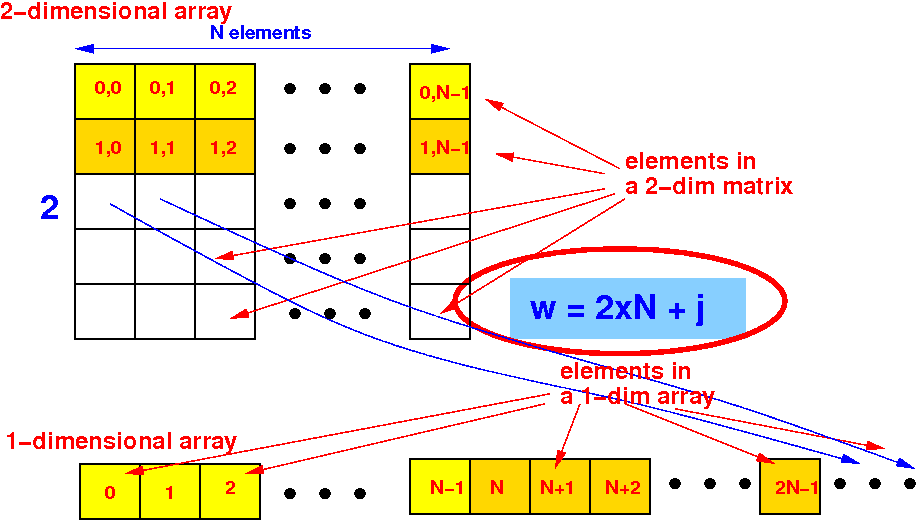

We map the 3rd row of the matrix to the third N elements in 1-dim array:

Relationship: w (1-dim index) = 2×N + j --- and so on..

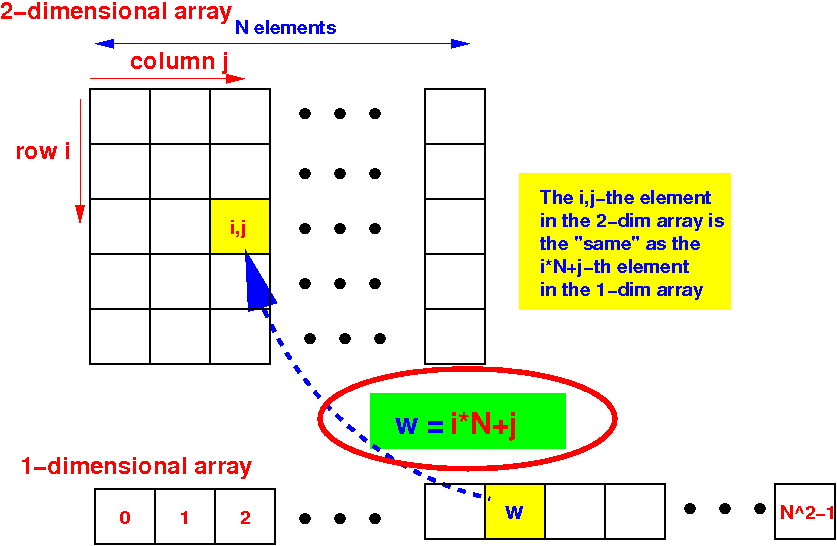

The mapping function from a 2-dimensional index (i,j) to a 1-dimensional index w is:

How to linearize a 2 dim index (i,j) to a 1-dim index w: w (1-dim index) = i×N + j

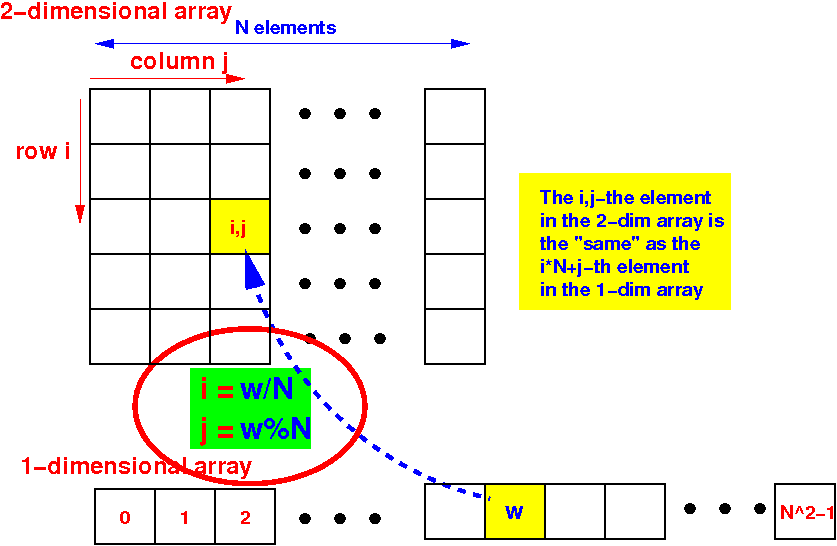

The reverse mapping function from a 1-dimensional index w to a 2-dimensional index (i,j):

w (1-dim index) = i×N + j --- Question: what is i = f(w) and j = g(w) ??

The reverse mapping function from a 1-dimensional index w to a 2-dimensional index (i,j) is:

Relationship: i = w/N and j = w%N

Recall: The matrix multiplication with 2 dimensional arrays

int N = ...; // Some pre-defined value

float A[N][N]; // Matrix 1

float B[N][N]; // Matrix 2

float C[N][N]; // Output matrix

//CPU matrix mult alg for static 2-dimensional arrays

for (int i = 0; i < N; i++)

for (int j = 0; j < N; j++)

{

/* ---------------------------------

Compute the matrix element

Cij = ∑k=0..N-1 AikBkj

--------------------------------- */

C[i][j] = 0.0;

for (int k = 0; k < N; k++)

C[i][j] = C[i][j] + A[i][k]*B[k][j]; // 1 vector product

}

|

We change it to use allocated arrays:

int N = ...; // Some pre-defined value float *A = malloc(N*N*sizeof(float)); // Matrix 1 float *B = malloc(N*N*sizeof(float)); // Matrix 2 float *C = malloc(N*N*sizeof(float)); // Output matrix //CPU matrix mult alg for static 2-dimensional arrays for (int i = 0; i < N; i++) for (int j = 0; j < N; j++) { /* --------------------------------- Compute the matrix element Cij = ∑k=0..N-1 AikBkj --------------------------------- */ C[i][j] = 0.0; for (int k = 0; k < N; k++) C[i][j] = C[i][j] + A[i][k]*B[k][j]; // 1 vector product } |

We map the 2-dim array index [i][j] to a 1-dim index [i*N+j]:

int N = ...; // Some pre-defined value float *A = malloc(N*N*sizeof(float)); // Matrix 1 float *B = malloc(N*N*sizeof(float)); // Matrix 2 float *C = malloc(N*N*sizeof(float)); // Output matrix //CPU matrix mult alg for static 2-dimensional arrays for (int i = 0; i < N; i++) for (int j = 0; j < N; j++) { /* --------------------------------- Compute the matrix element Cij = ∑k=0..N-1 AikBkj --------------------------------- */ C[0*N+0] = 0.0; for (int k = 0; k < N; k++) C[i*N+j] = C[i*N+j] + A[i*N+k]*B[k*N+j]; //1 vec product } |

DEMO: /home/cs355001/demo/CUDA/4-mult-matrix/cpu-mult-matrix.c

Recall that we can create B blocks with T (T ≤ 1024) threads/block:

#include <stdio.h>

#include <unistd.h>

__global__ void hello( )

{

printf("gridDim.x=%d, blockIdx.x=#%d,

blockDim.x=%d, threadIdx.x=#%d -> ID=%d\n",

gridDim.x, blockIdx.x, blockDim.x, threadIdx.x,

blockIdx.x*blockDim.x+threadIdx.x);

}

int main()

{

hello<<< B, T >>>( );

printf("I am the CPU: Hello World ! \n");

cudaDeviceSynchronize();

}

|

Each thread can

compute its

unique ID using

blockIdx.x*blockDim.x+threadIdx.x.

Thread k will

compute

C[i][j]

where i =

k/N and

j =

k%N

The CPU code: (1) allocate arrays and (2) then launches the threads (as <<< B,T >>>):

int main(int argc, char *argv[])

{

int N = Matrix size

}

|

N will be specified by the user input

The variable T is the number of threads in a block:

int main(int argc, char *argv[])

{

int N = Matrix size

int T = # threads per thread block (user choice)

}

|

We can pick a value for T later (with the condition that T ≤ 1024)

We will create N2 threads to perform the matrix multiplication:

int main(int argc, char *argv[])

{

int N = Matrix size

int T = # threads per thread block (user choice)

N*N

}

|

Thread (i,j) will compute C[i][j]

The number of blocks that we must create is:

int main(int argc, char *argv[])

{

int N = Matrix size

int T = # threads per thread block (user choice)

int B = ceil((float) N*N / T ); // # thread blocks needed

}

|

We will create the shared array variables next...

We define the reference variables to help us allocate the shared arrays:

int main(int argc, char *argv[])

{

int N = Matrix size

int T = # threads per thread block (user choice)

int B = ceil((float) N*N / T ); // # thread blocks needed

float *a, *b, *c;

}

|

(This is CUDA syntax... i.e, how CUDA provide the dynamic shared array to us)

Allocate the 3 shared matrices (as 1-dim arrays):

int main(int argc, char *argv[])

{

int N = Matrix size

int T = # threads per thread block (user choice)

int B = ceil((float) N*N / T ); // # thread blocks needed

float *a, *b, *c;

/* =======================================

Allocate 3 shared matrices (as arrays)

======================================= */

cudaMallocManaged(&a, N*N*sizeof(float));

cudaMallocManaged(&b, N*N*sizeof(float));

cudaMallocManaged(&c, N*N*sizeof(float));

// initialize x and y arrays (code omitted)

}

|

(This is CUDA syntax... i.e, how CUDA provide the dynamic shared array to us)

Launch (at least) N2 threads as <<< B,T >>> to perform the matrix multiplication:

int main(int argc, char *argv[])

{

int N = Matrix size

int T = # threads per thread block (user choice)

int B = ceil((float) N*N / T ); // # thread blocks needed

float *a, *b, *c;

/* =======================================

Allocate 3 shared matrices (as arrays)

======================================= */

cudaMallocManaged(&a, N*N*sizeof(float));

cudaMallocManaged(&b, N*N*sizeof(float));

cudaMallocManaged(&c, N*N*sizeof(float));

// initialize x and y arrays (code omitted)

matrixMult<<< B, T >>>(a, b, c, N); // Launch kernel

}

|

We will write the kernel code for matrixMult( ) next...

First, compute a unique ID for each thread within the <<< B, T >>> configuration:

__global__ void matrixMult(float *A, float *B, float *C, int n)

{

int w = blockIdx.x*blockDim.x + threadIdx.x; // w < n2

/* ---------------------------------

Compute the matrix element

Cij = ∑k=0..N-1 AikBkj

--------------------------------- */

}

|

We first find the matrix element C[i][j] (what's i and j ??) that thread w must compute

The thread w on the GPU must compute the element C[w/N][w%N] in the output matrix:

__global__ void matrixMult(float *A, float *B, float *C, int n)

{

int w = blockIdx.x*blockDim.x + threadIdx.x;

int i = w/n; // Row index

int j = w%n; // Column index

/* ---------------------------------

Compute the matrix element

Cij = ∑k=0..N-1 AikBkj

--------------------------------- */

}

|

(We have discussed this mapping in this slide set before)

The algorithm to compute C[i][j] is:

__global__ void matrixMult(float *A, float *B, float *C, int n)

{

int w = blockIdx.x*blockDim.x + threadIdx.x;

int i = w/n; // Row index

int j = w%n; // Column index

/* ---------------------------------

Compute the matrix element

Cij = ∑k=0..N-1 AikBkj

--------------------------------- */

C[i*n+j] = 0.0; // We could use: C[w] = 0.0

for ( int k = 0; k < n; k++ )

C[i*n+j] += A[i*n+k] * B[k*n+j]; // C[i*n+j] = C[w]

}

|

Notice: we created more than N2 threads with <<< B, T >>> !!!

To prevent the computation of non-existing matrix elements by the extra threads, we use:

__global__ void matrixMult(float *A, float *B, float *C, int n)

{

int w = blockIdx.x*blockDim.x + threadIdx.x;

if ( w < n*n )

{

int i = w/n; // Row index

int j = w%n; // Column index

/* ---------------------------------

Compute the matrix element

Cij = ∑k=0..N-1 AikBkj

--------------------------------- */

C[i*n+j] = 0.0; // We could use: C[w] = 0.0

for ( int k = 0; k < n; k++ )

C[i*n+j] += A[i*n+k] * B[k*n+j]; // C[i*n+j] = C[w]

}

}

|

DEMO: /home/cs355001/demo/CUDA/4-mult-matrix/mult-matrix2.cu